The InfoQ Trends Reports give InfoQ readers an overview of how AI, ML, and Data Engineering are evolving. This summary draws on a podcast in which the InfoQ editorial team and external experts discussed the AI and ML trends to watch over the next year. The podcast, published alongside the report and trends graph, explores these topics in more depth.

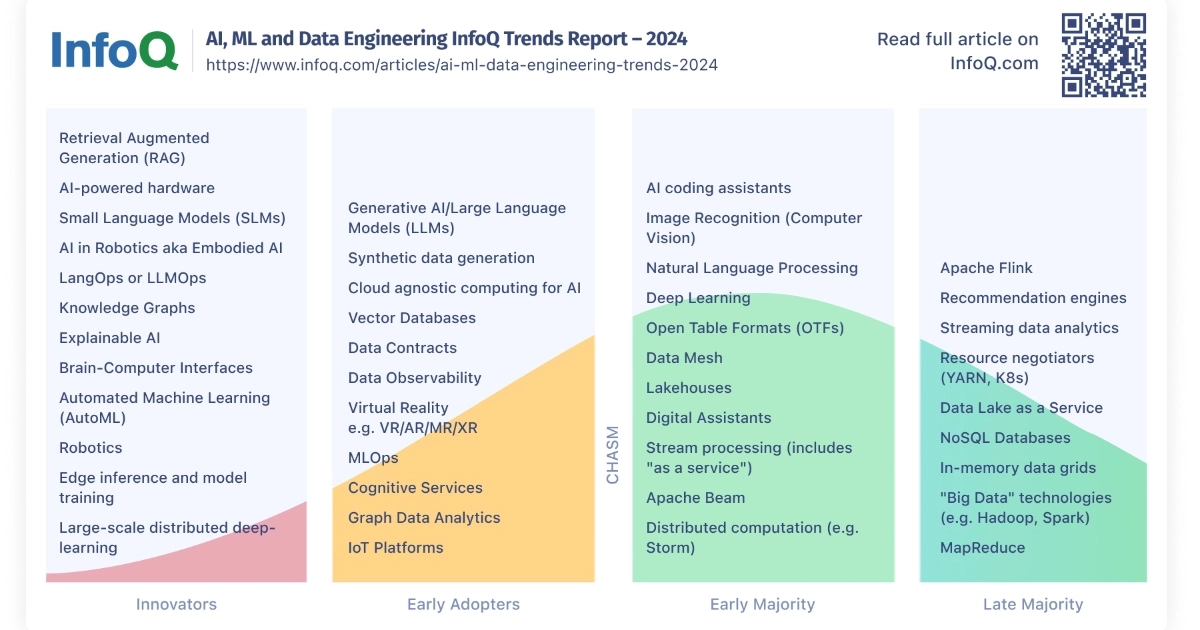

An integral element of the annual trends report is the trends graph. This graph delineates which trends and topics have reached the innovators stage and which ones have advanced to the early adopters and early majority categories. These categories draw inspiration from Geoffrey Moore’s “Crossing the Chasm.” At InfoQ, our focus primarily centers on categories that are yet to cross the chasm. Here is this year’s graph:

Since last year, AI technologies have seen substantial innovation, much of which is covered in this report.

This article walks through the trends graph and its stages of technology adoption, details the technologies that are new or updated since last year’s report, and explains which technologies and trends have moved up the adoption curve.

Here are some highlights of what has changed since last year’s report:

We’ll begin with recent additions to the Innovators category. Retrieval Augmented Generation (RAG) techniques are crucial for businesses that prefer not to rely on cloud-based LLM providers, and they are equally important for building scalable, practical LLM applications.
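RAG follows a retrieve-then-generate pattern: find the documents most relevant to a question, then place them in the prompt so the model answers from that context rather than from its training data alone. The toy sketch below is only an illustration under stated assumptions: bag-of-words cosine similarity stands in for the embedding model and vector database a real RAG system would use, the function names are hypothetical, and the final LLM call is omitted.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a real system would embed text with a model instead.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Augment the question with retrieved context before sending it to an LLM.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refund requests require an order number.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```

Because the documents stay in the company’s own store and only the assembled prompt reaches the model, this pattern pairs naturally with self-hosted models.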

Also new in the Innovators category is AI-integrated hardware, spanning AI-enhanced GPU infrastructure and AI-enabled PCs, mobile devices, and edge devices. This area is expected to grow considerably over the next year.

Because LLM-based platforms face obstacles with infrastructure deployment and operational costs, there is a move toward Small Language Models (SLMs), which are well suited to edge computing on compact devices. Companies such as Microsoft have released SLMs like Phi-3, which the community is encouraged to evaluate now to determine whether SLMs offer cost and other advantages over LLMs.

Given the rapid advances in generative AI and the release of new Large Language Models (LLMs) such as OpenAI’s GPT-4o, Meta’s Llama 3, and Google’s Gemma, it is appropriate to move the “Generative AI / Large Language Models (LLMs)” topic from the Innovators category to the Early Adopters category.

Similarly, synthetic data generation is moving to the Early Adopters category as more companies use it extensively to train their models.

AI coding assistants are increasingly common in enterprise software development, which justifies moving them to the Early Majority category.

In industrial settings, image recognition is increasingly used for tasks such as defect inspection and detection, supporting preventive maintenance that can substantially reduce or avert equipment failures.

ChatGPT was launched in November 2022. Since then, generative AI and large language models have progressed rapidly, and innovation shows no signs of slowing.

Major technology companies have been launching a steady stream of AI-driven products.

At Google I/O earlier this year, Google announced numerous updates, including enhancements to Gemini and the introduction of generative AI in Search, which is set to change how we interact with search.

Meanwhile, OpenAI introduced GPT-4o, an “omni” model that can process audio, images, and text together in real time.

Meta recently released Llama 3, followed by Llama 3.1, whose largest model has 405 billion parameters. Open-source LLMs, along with tools such as Ollama for running them locally, are gaining significant traction.

The AI and ML industry continues to evolve as major companies release new versions of their large language models, including GPT-4o, Llama 3, and Gemini. Companies such as Anthropic, with Claude, and Mistral, with Mixtral, further expand the range of available LLMs.

A growing trend in LLM technology is the expansion of context length, that is, the amount of input (measured in tokens) that a model can process in a single request. Mandy Gu highlighted this development in this year’s podcast:

“It’s definitely a trend that we’re seeing with longer context windows. Initially, the limited data input was a major challenge for using LLMs effectively. Earlier this year, Gemini, a foundational model by Google, introduced a context window of one million [tokens], significantly altering the landscape. This groundbreaking change has encouraged other providers to extend their context window capacities.”
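When an input exceeds a model’s context window, the application has to split it and process the pieces separately, which adds calls and can lose context that spans chunk boundaries. The sketch below uses a hypothetical helper, `chunk_tokens`, to show how many chunks a 300,000-token document needs under a 128,000-token window compared with a one-million-token window. It assumes whitespace-separated words as tokens; real models use subword tokenizers, so actual counts differ.

```python
def chunk_tokens(tokens: list[str], window: int, overlap: int = 0) -> list[list[str]]:
    """Split tokens into chunks of at most `window`, repeating `overlap` tokens between neighbours."""
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")
    step = window - overlap
    chunks: list[list[str]] = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # this chunk already reaches the end of the input
    return chunks


# Whitespace "tokens" stand in for a model's real tokenizer (an assumption).
document = ("token " * 300_000).split()
print(len(chunk_tokens(document, window=128_000)))    # hypothetical 128k-token window
print(len(chunk_tokens(document, window=1_000_000)))  # one-million-token window
```

The overlap parameter is a common design choice for keeping some shared context between neighbouring chunks, at the cost of processing a few tokens twice.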

Another major trend in language model evolution is the rise of small language models. These specialized models offer many of the capabilities of LLMs but are smaller, are trained on smaller amounts of data, and require less memory. Models cited in this category include Phi-3, TinyLlama, and DBRX Instruct, although DBRX, a mixture-of-experts model with 132 billion total parameters, is considerably larger than typical SLMs.
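The memory advantage of small models can be estimated with simple arithmetic: weight memory is roughly the parameter count multiplied by the bytes stored per parameter. This sketch compares a 3.8-billion-parameter model (the size of Phi-3-mini) with a 405-billion-parameter model (the largest Llama 3.1 model). It counts weights only and ignores activations, the KV cache, and runtime overhead, so real requirements are higher; the function name is illustrative.

```python
def weights_memory_gb(num_params: float, bytes_per_param: float) -> float:
    # Memory for the model weights alone, in gigabytes (10**9 bytes).
    # Activations, KV cache, and runtime overhead come on top of this.
    return num_params * bytes_per_param / 1e9


# Bytes per parameter for two common numeric formats.
FP16 = 2.0
INT4 = 0.5

for name, params in [("3.8B-parameter SLM", 3.8e9), ("405B-parameter LLM", 405e9)]:
    print(f"{name}: {weights_memory_gb(params, FP16):.1f} GB at fp16, "
          f"{weights_memory_gb(params, INT4):.1f} GB at 4-bit")
# 3.8B-parameter SLM: 7.6 GB at fp16, 1.9 GB at 4-bit
# 405B-parameter LLM: 810.0 GB at fp16, 202.5 GB at 4-bit
```

The gap explains why SLMs, especially when quantized, can fit on laptops, phones, and edge devices, while the largest LLMs need multi-GPU servers.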

With several LLM options available, how do we compare language models and choose the best one for the data and workloads in our applications? LLM evaluation is integral to adopting AI technologies successfully. Resources include LLM comparison sites and public leaderboards such as LMSYS Chatbot Arena (hosted on Hugging Face) and Stanford HELM, as well as evaluation tools like OpenAI’s Evals framework.

The InfoQ team recommends that LLM application developers use private, domain- or use-case-specific evaluation benchmarks to track changes in model performance. Ideally, these benchmarks should be human-written and should not yet have leaked into LLM training data, so that they provide an independent measure of model quality over time.
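A private benchmark can start small: a set of human-written prompts with reference answers that are never published, scored the same way each time a model, prompt, or orchestration changes. The sketch below is a minimal, hypothetical harness (`EvalCase` and `run_eval` are illustrative names). Exact matching after normalization is a simplifying assumption; many real tasks, such as summarization, need task-specific scoring like rubrics or model-graded evaluation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    expected: str  # human-written reference answer, kept out of public datasets


def normalize(text: str) -> str:
    # Case- and whitespace-insensitive comparison.
    return " ".join(text.lower().split())


def run_eval(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    # Fraction of cases where the model's answer matches the reference.
    if not cases:
        raise ValueError("no evaluation cases")
    hits = sum(normalize(model(c.prompt)) == normalize(c.expected) for c in cases)
    return hits / len(cases)


cases = [EvalCase("Capital of France?", "Paris"), EvalCase("2 + 2?", "4")]
canned = {"Capital of France?": " paris ", "2 + 2?": "5"}  # stand-in for a model's answers
print(run_eval(lambda p: canned[p], cases))
```

Recording the score for every model or prompt version turns this into a regression signal that public leaderboards cannot provide for a specific use case.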

From the podcast:

“Business value is something important we should think about for evaluation. I’m also quite skeptical of these general benchmarking tests, but I think what we should really do is…evaluate the LLMs, not just the foundational models, but the techniques and how we orchestrate the system for the task at hand. So if, for instance, the problem I’m trying to solve is…to summarize a research paper [and] I’m trying to distill the language, I should be evaluating the LLM’s capabilities for this very specific task because, going back to the no free lunch theorem, there’s not going to be one set of models or techniques that’s going to be the best for every task.”

AI-enabled agents are another area seeing a lot of innovation. Autonomous agents and GenAI-enabled virtual assistants are appearing in many places to help software developers be more productive, whether by boosting individual team members’ output or by helping them collaborate. Examples include GitHub Copilot, Copilot in Microsoft Teams, Devin, Mistral’s Codestral, and JetBrains’ local code completion.

GitHub also recently announced GitHub Models, which aims to help its large developer community become AI engineers and build with industry-leading AI models.

To quote Roland Meertens from the podcast:

“We see some things like, for example, Devin, this AI software engineer where you have this agent which has a terminal, a code editor, a browser, and you can basically assign it a ticket and say, ‘Hey, try to solve this.’ And it tries to do everything on its own. I think at the moment Devin had a success rate of maybe 20%, but that’s pretty okay for a free software engineer.”
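The agent pattern described here can be reduced to a loop: a policy (normally an LLM) looks at the task and what has happened so far, picks a tool, and the result is fed back until the policy decides to stop or the step budget runs out. In this minimal sketch, `run_agent`, the tool names, and the scripted policy are hypothetical stand-ins; a real agent would call a model to choose each action and wrap tools such as a terminal, an editor, and a browser.

```python
from typing import Callable

Tool = Callable[[str], str]
Policy = Callable[[str, list[str]], tuple[str, str]]  # (task, history) -> (action, argument)


def run_agent(policy: Policy, tools: dict[str, Tool], task: str,
              max_steps: int = 5) -> tuple[bool, list[str]]:
    # Ask the policy for the next action, run it, and record the observation.
    history: list[str] = []
    for _ in range(max_steps):
        action, arg = policy(task, history)
        if action == "finish":
            return True, history
        if action not in tools:
            history.append(f"error: unknown tool {action!r}")
            continue
        history.append(f"{action}({arg}) -> {tools[action](arg)}")
    return False, history  # step budget exhausted without finishing


def scripted_policy(task: str, history: list[str]) -> tuple[str, str]:
    # Stand-in for an LLM: run the tests once, then declare the task finished.
    return ("run_tests", "all") if not history else ("finish", "")


tools = {"run_tests": lambda arg: "2 passed"}  # hypothetical tool
ok, log = run_agent(scripted_policy, tools, "Fix the failing test")
print(ok, log)
```

The step budget matters in practice: as the quoted success rate suggests, agents often fail, so bounding how long one runs before handing the ticket back to a human is a sensible default.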

Daniel Dominguez mentioned that Meta will offer a new Meta AI agent to help small business owners automate many tasks in their businesses. HuggingChat also offers AI agents for daily workflows, and Slack now has AI agents that help summarize conversations, tasks, and daily workflows.

AI-integrated hardware builds AI acceleration directly into devices to improve performance across a wide range of tasks. AI-capable GPU infrastructure such as NVIDIA’s GeForce RTX, devices built on AI-focused chips such as Apple’s M4, mobile phones, and edge computing devices can all speed up AI model training and fine-tuning, as well as content creation and image generation.

With all these developments in generative AI and language models, deploying AI applications safely and securely is crucial to protecting consumer and company data privacy and security.

As multimodal language models like GPT-4o gain popularity, the machine learning and DevOps communities need to pay close attention to privacy and security for non-textual data such as video.

During a recent podcast, an expert in AI safety and security stressed the importance of robust data controls and security measures. Recommendations included building a thorough understanding of data lineage, training staff in data privacy practices, making secure processes simple enough for broad adoption, and designing systems to be auditable so that interactions and inferences can be tracked. Assessing potential security weaknesses and risks, such as prompt injection, as part of the design workflow was also discussed.
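Auditability can be built in at the point where an application calls the model. The sketch below wraps any model function so that each call emits one JSON Lines record with a timestamp, user, model name, and SHA-256 hashes of the prompt and response. The `audited` wrapper, the field names, and the choice to hash rather than store raw text are illustrative assumptions; a production system would write to tamper-resistant storage and follow its own data-retention policy.

```python
import hashlib
import json
import time
from typing import Callable


def audited(model: Callable[[str], str], model_name: str,
            sink: Callable[[str], object]) -> Callable[[str, str], str]:
    """Wrap `model` so each call writes one JSON audit record to `sink`."""
    def call(user: str, prompt: str) -> str:
        response = model(prompt)
        record = {
            "ts": time.time(),
            "user": user,
            "model": model_name,
            # Hashes let auditors match records to stored data without copying
            # potentially sensitive text into the log itself.
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        }
        sink(json.dumps(record) + "\n")
        return response
    return call


# Usage: collect records in memory; passing a file's `write` method works the same way.
audit_lines: list[str] = []
shout = audited(lambda p: p.upper(), "demo-model", audit_lines.append)  # stand-in model
print(shout("alice", "summarize the q3 report"))
print(audit_lines[0], end="")
```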

Another significant topic is the operational side of hosting and maintaining language models in production. Known collectively as LangOps or LLMOps, these practices are vital for supporting language models throughout their lifecycle.

Mandy Gu described her experiences with LLMOps at her company, providing insights into the practical aspects of maintaining language models in a commercial setting:

“We initiated the process by integrating self-hosted models, allowing for the incorporation of open-source models which we then fine-tune. These enhanced models are incorporated into our platform, becoming accessible for both our systems and users via the LLM gateway. Progressively, we developed a reusable API for retrieval and enhanced our vector database’s accessibility. As we continued to expand these platform components, our primary users—scientists, developers, and business professionals—began to experiment and identify workflows that could significantly benefit from LLMs. It is at this point that we step in to help them productionize and deploy these solutions at scale.”
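An LLM gateway like the one described here acts as a single entry point between applications and the models behind it. A minimal, hypothetical version is a registry that maps stable model names to backends and routes each request. This is not the implementation used at Mandy Gu’s company; `LLMGateway` and its methods are invented for illustration, and the lambda stands in for a self-hosted, fine-tuned model.

```python
from typing import Callable

Backend = Callable[[str], str]  # takes a prompt, returns a completion


class LLMGateway:
    """Hypothetical single entry point that routes requests to registered backends."""

    def __init__(self) -> None:
        self._backends: dict[str, Backend] = {}

    def register(self, name: str, backend: Backend) -> None:
        # Expose a self-hosted or fine-tuned model under a stable name callers rely on.
        self._backends[name] = backend

    def models(self) -> list[str]:
        return sorted(self._backends)

    def complete(self, model: str, prompt: str) -> str:
        # Shared policies (auth, PII redaction, rate limits, logging) would hook in here.
        try:
            backend = self._backends[model]
        except KeyError:
            raise KeyError(f"unknown model: {model!r}") from None
        return backend(prompt)


gateway = LLMGateway()
gateway.register("summarizer-ft", lambda p: "summary: " + p[:20])  # stand-in model
print(gateway.models())
print(gateway.complete("summarizer-ft", "Quarterly revenue rose sharply."))
```

Routing every call through one place is what makes it practical to swap models, add retrieval, or enforce security controls later without changing each consuming application.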

The podcast ran out of time before it could cover the latest AR/VR trends, but the topic remains highly relevant, so we touch on it here.

Augmented Reality (AR) and Virtual Reality (VR) technologies stand to gain a great deal from recent advances in AI. Apple and Meta have recently released AR/VR and wearable offerings, including Apple Vision Pro, Meta Quest Pro, Meta Quest 3, and Ray-Ban Meta smart glasses, which use AI and language models to enhance application development and user experiences.

The future of AI points toward openness and accessibility. Although most language models today are proprietary, the trend is shifting toward open-source alternatives. This year, the focus on Retrieval Augmented Generation (RAG) is likely to intensify, particularly for building scalable LLM applications. AI-integrated hardware such as PCs and edge devices will receive more attention, and Small Language Models (SLMs) are poised for wider exploration and adoption, especially for edge computing on compact devices. Meanwhile, security and safety will remain critical aspects of managing the lifecycle of language models.
